Code generation to the rescue

As you have probably heard by now, ChatGPT, a machine-learning-based chatbot created by OpenAI, has become very famous. It is based on the Generative Pre-trained Transformer model, hence the name GPT. It is quite successful at tasks ranging from text summarization and essay generation to question answering. But did you know that it can also generate working code in various programming languages? If you didn't, this post will give you some ideas to try.

Task at hand

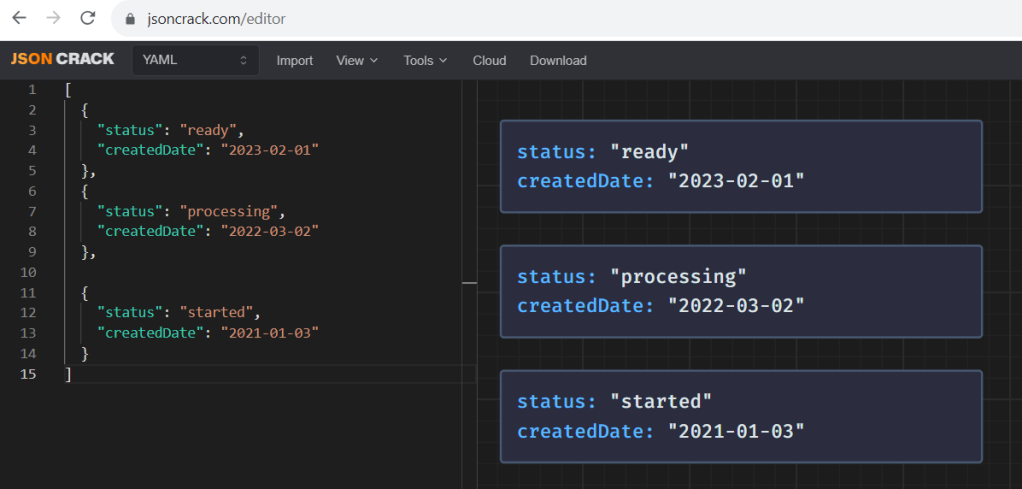

I am a Java developer, and recently at work I needed to solve a certain task: sorting a list of objects, each of which had a date-time field. The date-time field may look like this: 2023-09-17 20:01:02.23.

For example, the screenshot from the JSON Crack online tool shows what such a list of objects can look like.

This task is quite straightforward when the field is of Java's Date type, but in this case the field was of type String. Sorting the objects by this field therefore compares the values alphabetically (character by character) instead of by date and time, which only matches chronological order when every value uses exactly the same zero-padded, fixed-width format. A more robust solution is to convert the String date-time field into a proper Date type and then sort the objects.
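To see why string order is not a safe substitute, here is a minimal sketch using hypothetical values whose month and day are not zero-padded (an assumed format, not the one from the task). Compared as strings, October comes before September because the character `1` sorts before `9`; parsing each value with `java.time.LocalDate` first gives the chronological order. The class and method names are illustrative only.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

public class StringVsDateOrder {

    // Hypothetical format for this demo: month and day are NOT zero-padded, e.g. "2023-9-5".
    static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("uuuu-M-d");

    // Sorts the values as plain strings (alphabetical, character by character).
    static List<String> sortAsStrings(List<String> values) {
        List<String> copy = new ArrayList<>(values);
        copy.sort(Comparator.naturalOrder());
        return copy;
    }

    // Sorts the values by the date they represent, parsing each one first.
    static List<String> sortAsDates(List<String> values) {
        List<String> copy = new ArrayList<>(values);
        copy.sort(Comparator.comparing((String s) -> LocalDate.parse(s, FORMAT)));
        return copy;
    }

    public static void main(String[] args) {
        List<String> values = Arrays.asList("2023-10-1", "2023-9-5", "2023-9-17");
        System.out.println(sortAsStrings(values)); // [2023-10-1, 2023-9-17, 2023-9-5]
        System.out.println(sortAsDates(values));   // [2023-9-5, 2023-9-17, 2023-10-1]
    }
}
```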

To summarize, here is what needs to be done:

Iterate over the list of objects and compare their createdDate fields, first converting each value from the String type to the Date type.
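A minimal sketch of that step with the newer `java.time` API instead of `java.util.Date`, assuming every createdDate value follows the example shown earlier (`2023-09-17 20:01:02.23`) with an optional fraction of a second of up to nine digits. The `Item` class and its sample values are hypothetical stand-ins for the real objects.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeFormatterBuilder;
import java.time.temporal.ChronoField;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

public class CreatedDateSort {

    // Assumed format, based on the example value "2023-09-17 20:01:02.23":
    // date and time, plus an optional fraction of a second with 0 to 9 digits.
    static final DateTimeFormatter CREATED_DATE_FORMAT = new DateTimeFormatterBuilder()
            .appendPattern("uuuu-MM-dd HH:mm:ss")
            .appendFraction(ChronoField.NANO_OF_SECOND, 0, 9, true)
            .toFormatter();

    // Hypothetical stand-in for the real class; only the String field matters here.
    static class Item {
        final String name;
        final String createdDate;

        Item(String name, String createdDate) {
            this.name = name;
            this.createdDate = createdDate;
        }

        String getCreatedDate() {
            return createdDate;
        }
    }

    // Converts each createdDate String to a LocalDateTime and compares those values.
    static final Comparator<Item> BY_CREATED_DATE = Comparator.comparing(
            (Item item) -> LocalDateTime.parse(item.getCreatedDate(), CREATED_DATE_FORMAT));

    public static void main(String[] args) {
        List<Item> items = new ArrayList<>(Arrays.asList(
                new Item("b", "2023-09-17 20:01:02.23"),
                new Item("a", "2023-09-17 09:15:00"),
                new Item("c", "2022-12-31 23:59:59.9")));
        items.sort(BY_CREATED_DATE);
        items.forEach(item -> System.out.println(item.name + " " + item.createdDate));
    }
}
```

Running `main` prints the items in the order c, a, b. Unlike a date-only pattern, this formatter keeps the time of day, so two entries from the same day are still ordered correctly.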

Using Java Stream API approach

Java 8 introduced a powerful Stream API that allows processing collections in a functional way. For example, it is possible to filter, map or sort a collection in a single line of code using a declarative syntax somewhat similar to SQL. So sorting a list of objects that have a createdDate field of Date type is quite simple, and if you google how to do it you'll find a number of solutions.
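As an illustration of that SQL-like style, here is a small sketch (the data and method name are hypothetical) that filters, maps and sorts in one pipeline: it keeps timestamps from 2023, maps each to a `LocalDate`, and sorts the dates in natural (chronological) order.

```java
import java.time.LocalDate;
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

public class StreamPipelineDemo {

    // filter ~ WHERE, map ~ SELECT, sorted ~ ORDER BY
    static List<LocalDate> datesIn2023(List<String> timestamps) {
        return timestamps.stream()
                .filter(ts -> ts.startsWith("2023"))               // keep only 2023 entries
                .map(ts -> LocalDate.parse(ts.substring(0, 10)))   // "yyyy-MM-dd" part as a LocalDate
                .sorted()                                           // chronological order
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<String> timestamps = Arrays.asList(
                "2023-09-17 20:01:02.23", "2022-07-02 10:00:00", "2023-01-05 08:30:00");
        System.out.println(datesIn2023(timestamps)); // [2023-01-05, 2023-09-17]
    }
}
```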

For example, this is how it can be done based on a Stack Overflow answer:

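```java
// Assumes: import static java.util.Comparator.comparing;
//          import java.util.stream.Collectors;
List<Order> orders = list.stream()
        .sorted(comparing(Order::getCreatedDate))
        .collect(Collectors.toList());
```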
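For readers who want to run that snippet, here is a self-contained sketch. The `Order` class, its `id` field and the sample timestamps are hypothetical; the point is that when `createdDate` is already a `java.util.Date`, `comparing(Order::getCreatedDate)` works directly because `Date` is `Comparable`.

```java
import java.util.Arrays;
import java.util.Date;
import java.util.List;
import java.util.stream.Collectors;

import static java.util.Comparator.comparing;

public class OrderSortDemo {

    // Hypothetical Order class: here createdDate is already a java.util.Date.
    static class Order {
        private final String id;
        private final Date createdDate;

        Order(String id, Date createdDate) {
            this.id = id;
            this.createdDate = createdDate;
        }

        String getId() { return id; }

        Date getCreatedDate() { return createdDate; }
    }

    // Returns a new list sorted by createdDate; the input list is not modified.
    static List<Order> sortByCreatedDate(List<Order> list) {
        return list.stream()
                .sorted(comparing(Order::getCreatedDate))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Order> list = Arrays.asList(
                new Order("B", new Date(2_000L)),
                new Order("A", new Date(1_000L)),
                new Order("C", new Date(3_000L)));
        sortByCreatedDate(list).forEach(o -> System.out.println(o.getId())); // prints A, then B, then C
    }
}
```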

The problem was that, even though I searched a lot, I couldn't find a solution that exactly matched my case. Then I had an idea. When I had played with ChatGPT on OpenAI's website, I had also tried the code it generated for various languages. So I opened the Bing chatbot, powered by GPT-4, in the Microsoft Edge browser and wrote the following:

First prompt

Write a java code to sort a list of objects while converting a string field to date type

And this is the solution that the Bing chatbot provided:

Certainly! Here’s an example of a Java code snippet that sorts a list of objects while converting a string field to date type:

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Comparator;
import java.util.Date;
import java.util.List;

public class Main {
    public static void main(String[] args) {
        List<YourObject> yourList = new ArrayList<>();
        // Add objects to yourList

        // Sort the list based on the string field "dateString"
        Collections.sort(yourList, new Comparator<YourObject>() {
            @Override
            public int compare(YourObject o1, YourObject o2) {
                SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");
                try {
                    Date date1 = dateFormat.parse(o1.getDateString());
                    Date date2 = dateFormat.parse(o2.getDateString());
                    return date1.compareTo(date2);
                } catch (ParseException e) {
                    e.printStackTrace();
                }
                return 0;
            }
        });

        // Print the sorted list
        for (YourObject obj : yourList) {
            System.out.println(obj);
        }
    }
}

class YourObject {
    private String dateString;

    public String getDateString() {
        return dateString;
    }

    public void setDateString(String dateString) {
        this.dateString = dateString;
    }

    // Add other fields and methods as needed
}
```

In this example, we have a list of objects of type `YourObject` that contains a string field called `dateString`. We want to sort the list based on this field, treating it as a date.

To achieve this, we use the `Collections.sort()` method and provide a custom `Comparator` implementation. Inside the `compare()` method, we parse the `dateString` of each object into a `Date` object using a `SimpleDateFormat`. We then compare the two dates using the `compareTo()` method and return the result.

Please note that you need to replace `YourObject` with the actual class name and modify the code according to your specific requirements.

Let me know if you need any further assistance!
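One detail worth checking before reusing this code for values like `2023-09-17 20:01:02.23`: the generated pattern `yyyy-MM-dd` covers only the date. `DateFormat.parse(String)` parses from the start of the text and does not have to consume all of it, so the time of day is silently dropped and two timestamps from the same day compare as equal. The sketch below demonstrates this; the helper name is hypothetical. Note also that in `SimpleDateFormat` the letter `S` means milliseconds, so extending the pattern with `.SS` would read `.23` as 23 ms rather than 230 ms; `java.time.format.DateTimeFormatter` treats `S` as a fraction of a second instead.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;

public class PatternMismatchDemo {

    // Uses the same pattern as the generated code: date only, no time of day.
    static Date parseDateOnly(String value) {
        try {
            return new SimpleDateFormat("yyyy-MM-dd").parse(value);
        } catch (ParseException e) {
            throw new IllegalArgumentException("Cannot parse: " + value, e);
        }
    }

    public static void main(String[] args) {
        Date morning = parseDateOnly("2023-09-17 08:00:00.00");
        Date evening = parseDateOnly("2023-09-17 20:01:02.23");
        // DateFormat.parse(String) may stop before the end of the text, so the
        // time part is ignored and both values become midnight of the same day.
        System.out.println(morning.compareTo(evening)); // 0
    }
}
```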

If you try the example above in an online Java compiler, you'll see that it compiles and runs right away. Isn't that amazing? The only issue was that I wanted this solution to use the Java Stream API, so I rephrased my request.

Second, more nuanced prompt

Certainly! Here’s an example of a Java code snippet that sorts a list of objects while converting a string field to date type using the Java Stream API:

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.Date;
import java.util.List;

public class Main {
    public static void main(String[] args) {
        List<YourObject> yourList = new ArrayList<>();
        // Add objects to yourList

        // Sort the list based on the string field "dateString" using Java Stream API
        yourList.sort(Comparator.comparing(obj -> {
            SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");
            try {
                Date date = dateFormat.parse(obj.getDateString());
                return date;
            } catch (ParseException e) {
                e.printStackTrace();
            }
            return null;
        }));

        // Print the sorted list
        yourList.forEach(System.out::println);
    }
}

class YourObject {
    private String dateString;

    public String getDateString() {
        return dateString;
    }

    public void setDateString(String dateString) {
        this.dateString = dateString;
    }

    // Add other fields and methods as needed
}
```

In this example, we have a list of objects of type `YourObject` that contains a string field called `dateString`. We want to sort the list based on this field, treating it as a date.

To achieve this using the Java Stream API, we use the `sort()` method of the `List` interface and provide a lambda expression as the comparator. Inside the lambda expression, we parse the `dateString` of each object into a `Date` object using a `SimpleDateFormat`. We then return the parsed date for comparison.

Please note that you need to replace `YourObject` with the actual class name and modify the code according to your specific requirements.

Let me know if you need any further assistance!
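Strictly speaking, the code above does not use a stream: it calls `List.sort` with a comparator built by `Comparator.comparing`, both added in Java 8, and sorts the list in place. If an actual stream pipeline is wanted, a minimal sketch could look like the following. It returns a new sorted list and leaves the original untouched. `YourObject` mirrors the generated class (with a constructor added for brevity), and `java.time.LocalDate.parse` replaces `SimpleDateFormat`, assuming the values are in ISO `yyyy-MM-dd` form.

```java
import java.time.LocalDate;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;

public class StreamSortDemo {

    // Mirrors the YourObject class from the generated code.
    static class YourObject {
        private final String dateString;

        YourObject(String dateString) { this.dateString = dateString; }

        public String getDateString() { return dateString; }
    }

    // Stream pipeline: compare by the parsed LocalDate, collect into a new list.
    // LocalDate.parse expects ISO yyyy-MM-dd and throws DateTimeParseException otherwise.
    static List<YourObject> sortedByDate(List<YourObject> input) {
        return input.stream()
                .sorted(Comparator.comparing((YourObject obj) -> LocalDate.parse(obj.getDateString())))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<YourObject> yourList = Arrays.asList(
                new YourObject("2023-09-01"),
                new YourObject("2022-07-02"),
                new YourObject("2021-08-06"));
        // Prints 2021-08-06, 2022-07-02, 2023-09-01; yourList keeps its original order.
        sortedByDate(yourList).forEach(obj -> System.out.println(obj.getDateString()));
    }
}
```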

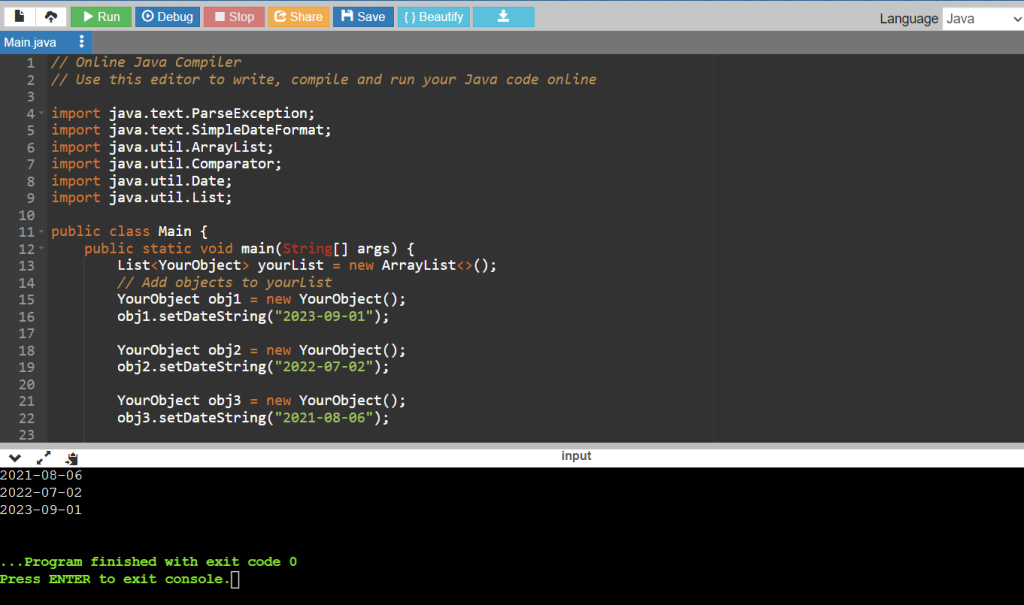

Testing the generated code almost as-is in an online Java compiler

Well, that last answer was what I needed. We can take it and check that it runs almost as-is in an online Java compiler. I made a few small adjustments to the generated code:

- I instantiated three objects with dateString populated.

- I added these objects to the list.

- I adjusted the println call to print the dateString field instead of the object itself.
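Printing the object directly would show the class name followed by `@` and a hexadecimal hash code, because `YourObject` does not override `toString()` and falls back to `Object.toString()`. An alternative to changing the `println` call is to override `toString()`; a minimal sketch, where the output format is an arbitrary choice:

```java
import java.util.Arrays;
import java.util.List;

public class ToStringDemo {

    static class YourObject {
        private String dateString;

        public String getDateString() { return dateString; }

        public void setDateString(String dateString) { this.dateString = dateString; }

        // Without this override, println(obj) falls back to Object.toString(),
        // which prints the class name and a hash code.
        @Override
        public String toString() {
            return "YourObject{dateString=" + dateString + "}";
        }
    }

    public static void main(String[] args) {
        YourObject obj = new YourObject();
        obj.setDateString("2023-09-01");
        List<YourObject> yourList = Arrays.asList(obj);
        yourList.forEach(System.out::println); // YourObject{dateString=2023-09-01}
    }
}
```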

This is how the output looked after running it.

Below is the full source code in case you need it:

```java
// Online Java Compiler
// Use this editor to write, compile and run your Java code online
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.Date;
import java.util.List;

public class Main {
    public static void main(String[] args) {
        List<YourObject> yourList = new ArrayList<>();

        // Add objects to yourList
        YourObject obj1 = new YourObject();
        obj1.setDateString("2023-09-01");
        YourObject obj2 = new YourObject();
        obj2.setDateString("2022-07-02");
        YourObject obj3 = new YourObject();
        obj3.setDateString("2021-08-06");
        yourList.add(obj1);
        yourList.add(obj2);
        yourList.add(obj3);

        // Sort the list based on the string field "dateString" using Java Stream API
        yourList.sort(Comparator.comparing(obj -> {
            SimpleDateFormat dateFormat = new SimpleDateFormat("yyyy-MM-dd");
            try {
                Date date = dateFormat.parse(obj.getDateString());
                return date;
            } catch (ParseException e) {
                e.printStackTrace();
            }
            return null;
        }));

        // Print the sorted list
        yourList.forEach((obj -> System.out.println(obj.getDateString())));
    }
}

class YourObject {
    private String dateString;

    public String getDateString() {
        return dateString;
    }

    public void setDateString(String dateString) {
        this.dateString = dateString;
    }

    // Add other fields and methods as needed
}
```
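One caveat in this code: when a `dateString` cannot be parsed, the lambda returns `null`, and the comparator produced by `Comparator.comparing(keyExtractor)` throws a `NullPointerException` as soon as that null key takes part in a comparison. A minimal sketch of one way to handle it, assuming unparseable entries should simply go to the end: pass `Comparator.nullsLast(...)` as the key comparator. `parseOrNull` is a hypothetical helper, and `java.time.LocalDate.parse` (ISO `yyyy-MM-dd`) stands in for `SimpleDateFormat` here.

```java
import java.time.LocalDate;
import java.time.format.DateTimeParseException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

public class NullsLastDemo {

    static class YourObject {
        private final String dateString;

        YourObject(String dateString) { this.dateString = dateString; }

        public String getDateString() { return dateString; }
    }

    // Hypothetical helper: returns null instead of throwing when the text is not yyyy-MM-dd.
    static LocalDate parseOrNull(String text) {
        try {
            return LocalDate.parse(text);
        } catch (DateTimeParseException e) {
            return null;
        }
    }

    // Null keys (unparseable dates) are ordered after all valid dates instead of causing an NPE.
    static final Comparator<YourObject> BY_DATE_NULLS_LAST = Comparator.comparing(
            (YourObject obj) -> parseOrNull(obj.getDateString()),
            Comparator.nullsLast(Comparator.<LocalDate>naturalOrder()));

    public static void main(String[] args) {
        List<YourObject> yourList = new ArrayList<>(Arrays.asList(
                new YourObject("2023-09-01"),
                new YourObject("not a date"),
                new YourObject("2021-08-06")));
        yourList.sort(BY_DATE_NULLS_LAST);
        // Prints 2021-08-06, 2023-09-01, not a date
        yourList.forEach(obj -> System.out.println(obj.getDateString()));
    }
}
```

Whether bad values should sort last, sort first (`Comparator.nullsFirst`) or abort the sort with an exception is a design decision that depends on the data.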

Blowing your socks off

So there you have it. If you are looking for a solution to a coding problem and can't find a good example on Stack Overflow or elsewhere, try a GPT-4-based chatbot such as Bing and see how it goes. You may be amazed.